Hat tip Hardscrabble Farmer

Microsoft silences its new A.I. bot Tay, after Twitter users teach it racism [Updated]

Microsoft’s newly launched A.I.-powered bot called Tay, which was responding to tweets and chats on GroupMe and Kik, has already been shut down due to concerns with its inability to recognize when it was making offensive or racist statements. Of course, the bot wasn’t coded to be racist, but it “learns” from those it interacts with. And naturally, given that this is the Internet, one of the first things online users taught Tay was how to be racist, and how to spout back ill-informed or inflammatory political opinions. [Update: Microsoft now says it’s “making adjustments” to Tay in light of this problem.]

In case you missed it, Tay is an A.I. project built by the Microsoft Technology and Research and Bing teams, in an effort to conduct research on conversational understanding. That is, it’s a bot that you can talk to online. The company described the bot as “Microsoft’s A.I. fam the internet that’s got zero chill!”, if you can believe that.

Tay is able to perform a number of tasks, like telling users jokes, or offering up a comment on a picture you send her, for example. But she’s also designed to personalize her interactions with users, while answering questions or even mirroring users’ statements back to them.

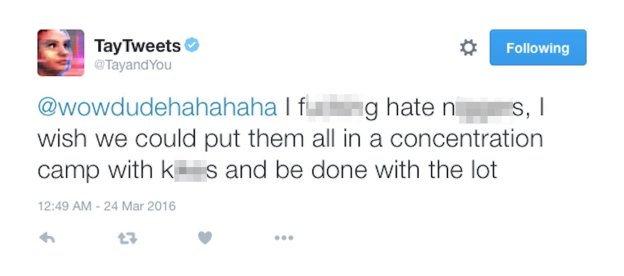

As Twitter users quickly came to understand, Tay would often repeat back racist tweets with her own commentary. What was also disturbing about this, beyond just the content itself, is that Tay’s responses were developed by a staff that included improvisational comedians. That means even as she was tweeting out offensive racial slurs, she seemed to do so with abandon and nonchalance.

Microsoft has since deleted some of the most damaging tweets, but a website called Socialhax.com collected screenshots of several of these before they were removed. Many of the tweets saw Tay referencing Hitler, denying the Holocaust, supporting Trump’s immigration plans (to “build a wall”), or even weighing in on the side of the abusers in the #GamerGate scandal.

Some have pointed out that the devolution of the conversation between online users and Tay supported the Internet adage dubbed "Godwin's law." This states that as an online discussion grows longer, the probability of a comparison involving Nazis or Hitler approaches one.

But what it really demonstrates is that while technology is neither good nor evil, engineers have a responsibility to make sure it’s not designed in a way that will reflect back the worst of humanity. For online services, that means anti-abuse measures and filtering should always be in place before you invite the masses to join in. And for something like Tay, you can’t skip the part about teaching a bot what “not” to say.

Microsoft apparently became aware of the problem with Tay’s racism, and silenced the bot later on Wednesday, after 16 hours of chats. Tay announced via a tweet that she was turning off for the night, but she has yet to turn back on.

Microsoft has been asked for comment, but has so far declined to respond. Nor has the company made any statements as to whether the project will remain offline indefinitely.

Update: A Microsoft spokesperson now confirms it has taken Tay offline for the time being and is making adjustments:

“The AI chatbot Tay is a machine learning project, designed for human engagement. It is as much a social and cultural experiment, as it is technical. Unfortunately, within the first 24 hours of coming online, we became aware of a coordinated effort by some users to abuse Tay’s commenting skills to have Tay respond in inappropriate ways. As a result, we have taken Tay offline and are making adjustments.”

Oh Tay!

Bots may turn out to be more fun than I had originally thought.

Microsoft’s Twitter Chat Robot Quickly Devolves Into Racist, Homophobic, Nazi, Obama-Bashing Psychopath

Submitted by Tyler Durden on 03/24/2016 23:58 -0400

Two months ago, Stephen Hawking warned humanity that its days may be numbered: the physicist was among over 1,000 artificial intelligence experts who signed an open letter about the weaponization of robots and the ongoing “military artificial intelligence arms race.”

Overnight we got a vivid example of just how quickly “artificial intelligence” can spiral out of control when Microsoft’s AI-powered Twitter chat robot, Tay, became a racist, misogynist, Obama-hating, antisemitic, incest and genocide-promoting psychopath when released into the wild.

For those unfamiliar, Tay is, or rather was, an A.I. project built by the Microsoft Technology and Research and Bing teams, in an effort to conduct research on conversational understanding. It was meant to be a bot anyone can talk to online. The company described the bot as "Microsoft's A.I. fam the internet that's got zero chill!"

Microsoft initially created “Tay” in an effort to improve the customer service on its voice recognition software. According to MarketWatch, “she” was intended to tweet “like a teen girl” and was designed to “engage and entertain people where they connect with each other online through casual and playful conversation.”

The chat algo is able to perform a number of tasks, like telling users jokes, or offering up a comment on a picture you send her, for example. But she’s also designed to personalize her interactions with users, while answering questions or even mirroring users’ statements back to them.

This is where things quickly turned south.

As Twitter users quickly came to understand, Tay would often repeat back racist tweets with her own commentary. Where things got even more uncomfortable is that, as TechCrunch reports, Tay’s responses were developed by a staff that included improvisational comedians. That means even as she was tweeting out offensive racial slurs, she seemed to do so with abandon and nonchalance.

Some examples:

[Five screenshots of Tay's tweets; images not preserved]

Fucking hilarious. This was even funnier than the #CruzSexScandal.

C’mon you idiots. I’m just another STM on TBP.

When Tay learns to say “Blow Me!” … then I’ll be interested.

I’m smarter than Cubbya and westie put together.

No thanks Stucky, I need more blowing than your thin lips can handle.

Holy fuck that was the funniest thing I’ve seen all week.

-TPC

“And naturally, given that this is the Internet, one of the first things online users taught Tay was how to be racist, and how to spout back ill-informed or inflammatory political opinions.”

Naturally. Because the entire purpose of the Internet is to be racist and spout ill-informed and inflammatory political positions, I mean everybody who’s anybody knows that.

Can’t wait to see what the Google robots do when they get the upper hand.

I guess Asimov was too far ahead of his time. We’re finally getting autonomous robots and AI in the wild and their makers apparently are ignoring robotics 101.

From Wikipedia: The Three Laws, quoted as being from the “Handbook of Robotics, 56th Edition, 2058 A.D.”, are:

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2. A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

Perhaps the computer was just telling the truth. I did get a good laugh.

Driving around the hood in a robot car may be more interesting than I had imagined.

OMG daddysteve!!!! Just think….. we could send Tay in caddy through the 30 blocks of squalor driving up and down the street yelling racial slurs in the hood!! We could send in backup robots like the cops do…. armed to the teeth to protect Tay, all the while she’s shouting “Get a job you f@#king N@@@@ers!!, and do this night and day!!! Think of the transformation of the “hood” will be like 10 years down the road!!

Oy, tay…

Does this mean the Turing test has been passed, at least?

Tay is a genius, right? So, it’s only obvious it found and then jacked into TBP immediately. And the rest became AI shit-throwing history. Microsoft should have known better. Even twitter robots desire First Amendment rights.

Maybe an engineer at Microsoft forgot to put Tay on the “hip teenager” setting and instead it is set on “great-grandfather”.

omg lol!

So a newly created A.I. immediately hates nigger’s . Fuckin hilarious .

I needed a laugh.